It’s weird: the artificial intelligence is my biggest project right now, the thing I’m most excited about. I’m putting in lots of work and making lots of progress. Yet I’ve barely mentioned it on the blog for the past few months.

Why?

Partly because my AI work these days is…abstract. The code I’m writing now lays the groundwork for cool, gee-whiz features later, but there isn’t much “real” stuff yet that I can demo or describe.

I could tell you about the abstract stuff, but I avoid that for two reasons. First, because I don’t want to bore you.

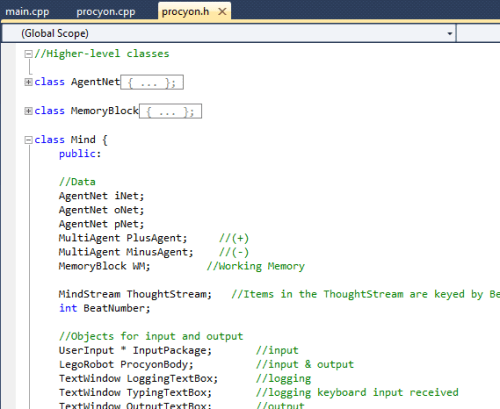

And second, because – crazy as it sounds – I think this thing might actually work. If it does, it could be dangerous in the wrong hands. So I want to keep the details secret for now. (The code snapshot above is real, but – I think – not especially helpful to anyone trying to copy it. Yes, I really am paranoid enough to think about things like that.)

Having said all that, I can tell you a few things.

For starters, I really am working hard. I put in an hour and a half every day (sometimes dropping to half an hour when my schedule’s tight). I’ve written over 5,000 lines of C++ and over 1,000 lines of C#, and created a database with 13 tables and 43 stored procedures (to say nothing of the XAML, JavaScript, and Ruby components). I’ve filled two notebooks with designs and ideas. Those numbers aren’t astoundingly high, but the code works, and I’m revising it constantly.

And for all the time I’ve spent on theory, design, and groundwork, the current program does actually do some fairly cool things. For instance…

- You type to it, and it types back.

- You can make it recognize arbitrary new commands. No hard-coding required: just click a few buttons in the MindBuilder interface and drop the new agents into the database.

- It takes as input not merely a stream of typed characters but a stream of moments. So it can recognize words, but it can also notice when you pause while typing.

- Right now, the inputs are the keyboard and robot sensors, and the outputs are text and robot actions. But the framework is completely flexible. Adding any new input or output – a camera, a thermometer, whatever – is just a matter of writing the interface. The underlying architecture doesn’t change at all.

- It can recognize words, phrases, sentences, even parts of speech. It can respond differently to later commands based on something you told it earlier. And again, none of this is hard-coded, so the exact same mechanism that recognizes a written phrase could also recognize a tune, or Morse code.
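As a purely hypothetical illustration of what “a stream of moments” could mean (the real design stays under wraps), here is a minimal C++ sketch. It assumes each moment is just a timestamp plus an optional typed character, and it marks any gap between keystrokes longer than a threshold. The names `Moment`, `TextWithPauses`, and `pause_ms` are invented for this example; nothing here is taken from the actual code.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical: one "moment" is a timestamp plus whatever the keyboard
// reported at that time (a typed character, or nothing at all).
struct Moment {
    std::int64_t time_ms;  // milliseconds since the stream started
    char key;              // '\0' means "nothing typed in this moment"
};

// Rebuilds the typed text, inserting a '|' marker wherever the gap between
// two consecutive keystrokes is strictly longer than pause_ms.
std::string TextWithPauses(const std::vector<Moment>& moments, std::int64_t pause_ms) {
    assert(pause_ms >= 0);  // a negative threshold makes no sense
    std::string out;
    bool have_last = false;
    std::int64_t last_key_time = 0;
    for (const Moment& m : moments) {
        if (m.key == '\0') continue;  // empty moment: time passes, nothing typed
        if (have_last && m.time_ms - last_key_time > pause_ms) {
            out += '|';  // the silence itself becomes part of the input
        }
        out += m.key;
        last_key_time = m.time_ms;
        have_last = true;
    }
    return out;
}
```

Given keystrokes `h` at 0 ms, `i` at 120 ms, `y` at 1600 ms, and `o` at 1700 ms with a 1000 ms threshold, it returns `hi|yo`. Because the function only looks at timestamps and symbols, the same idea would apply to any timed signal, such as dots and dashes in Morse code.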

The history of AI is littered with the ashes of hubris. So although I’m wrapped up in the joy of hubris today, I’m well aware it’s a delusion. I can honestly say that the path to strong AI seems fairly clear and that I don’t see any major obstacles to creating a thinking machine. Yet I know the obstacles are surely there, and I’ll see them soon enough.

Still, it’s exciting.

Any questions?